I’m sure you’ve been inundated with articles about the new iPhone 12 models this week. I’m not going to do an exhaustive review, but I’d like to touch on a couple of points I haven’t seen covered in great detail.

It’s a well-known marketing strategy to create a small, medium, and large version of your product, because it’s believed to help the consumer choose (medium is usually chosen). So when Apple came out with 4 versions of the iPhone, it made choosing much more difficult. Now we have to pay attention to specs and really dig into them to figure out whether we care or not.

LiDAR for Low-Light Focus

One of the features that intrigued me when they announced it was that the iPhone 12 Pro and Pro Max would have a “LiDAR Scanner for Night mode portraits, faster autofocus in low light, and next-level AR experiences”. When the 2020 iPad Pro came out with LiDAR, there was barely a head nod to it, but in the iPhone 12 Pro, it is a much bigger deal.

Augmented Reality seems to be slowly creeping along in capability and popularity, but it isn’t a reason to buy a new iPhone or iPad just yet. It was the faster autofocus in low light that caused my excitement. Apple has made continuous improvements in the iPhone’s ability to capture good photos in low light, and I was also excited that Steve and I would hand down our iPhone 11 Pros to Lindsay and Kyle because it meant we would get better low-light photos from them of our new granddaughters.

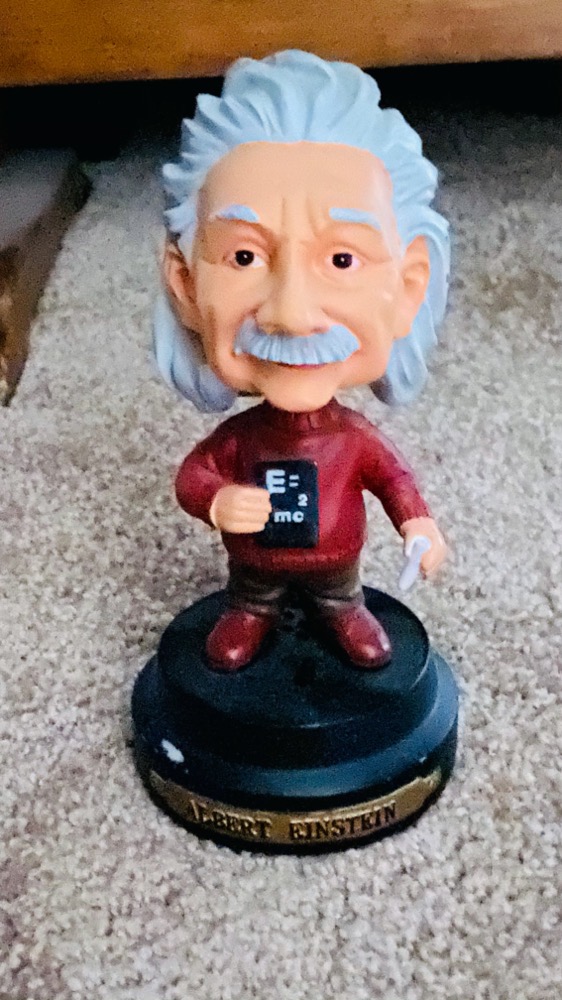

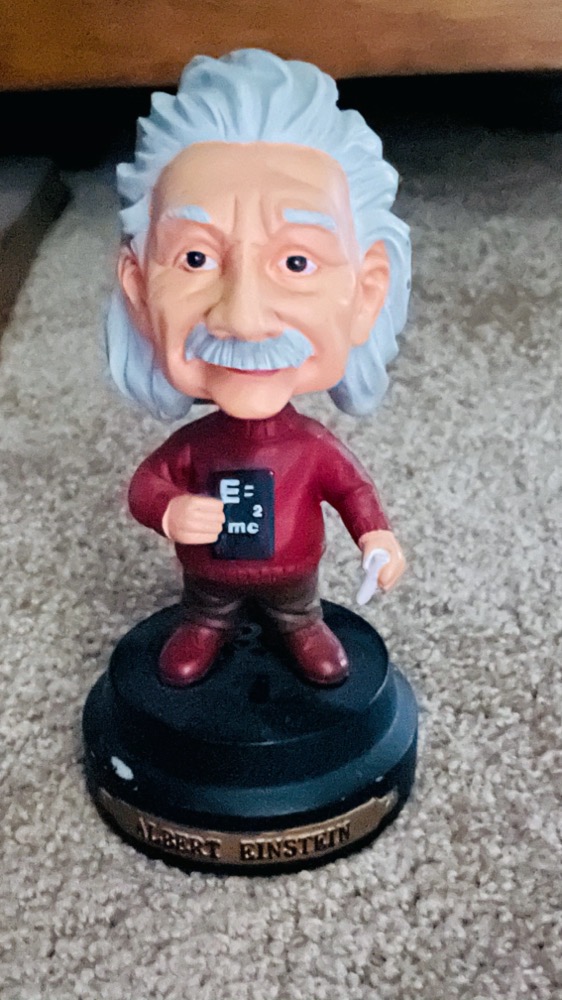

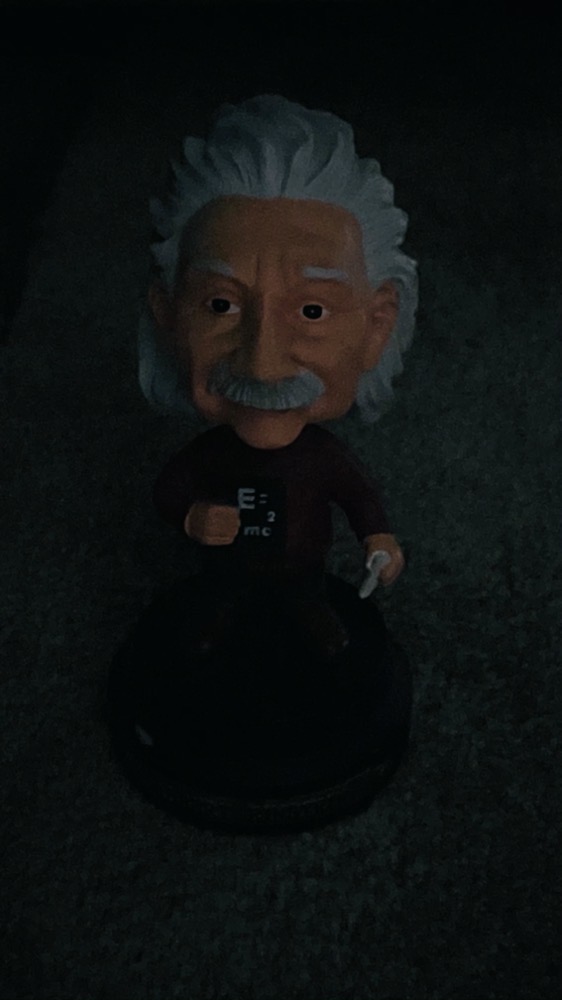

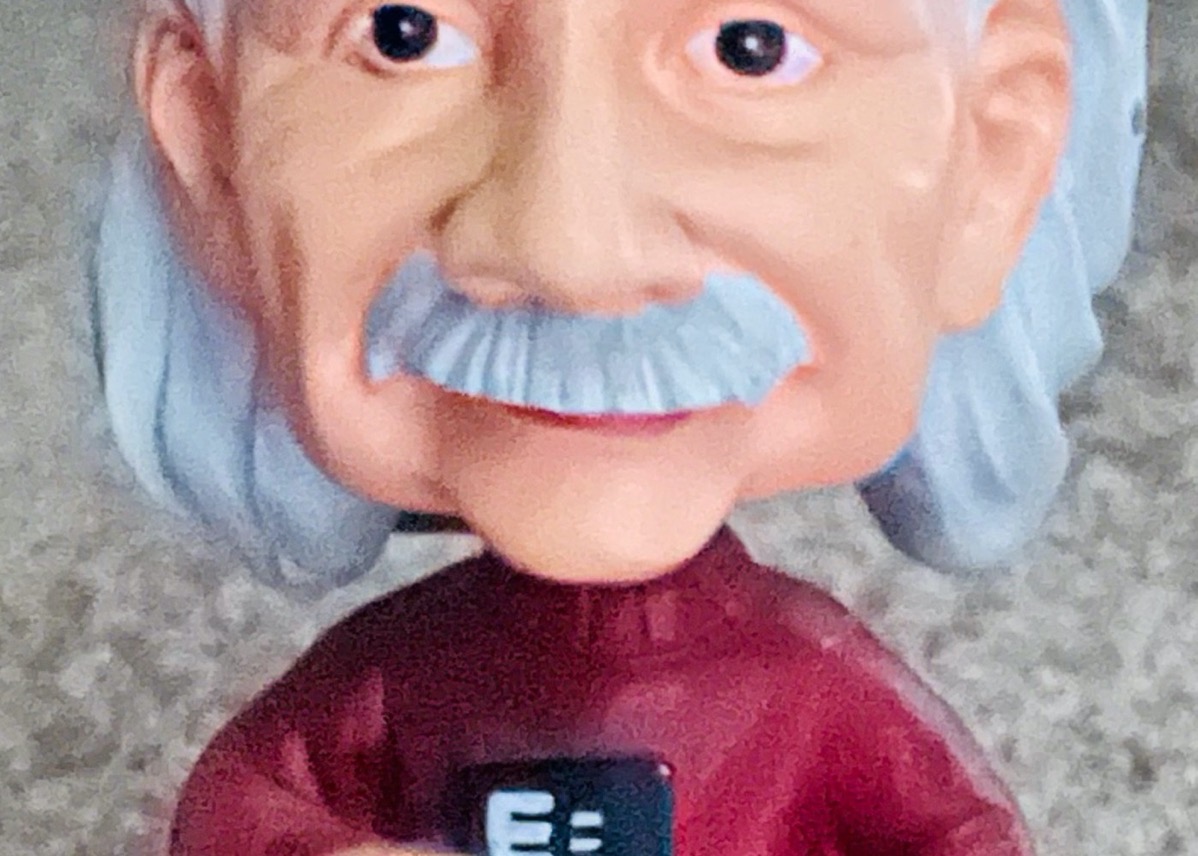

I got the 12 Pro this week and one of the first things I did was go into a dark closet to take some photos with it and the 11 Pro. I chose as my subject my bobblehead Einstein. I pulled the door of the closet about 80% shut and tried to take photos of him with the two phones.

My first observation is that the iPhone 11 Pro had trouble focusing on Einstein, and even though I was tapping directly on him to tell it where to focus, I still got a couple of blurry images. On my third try, I was able to coerce it into successfully focusing on the bobblehead. The iPhone 12 Pro had absolutely no trouble focusing on Einstein and captured him easily every time. The reason the 12 Pro could focus in near darkness while the 11 Pro struggled is probably the LiDAR in the 12 Pro. It seems that the reality lives up to the hype.

Now let’s talk a bit about how Night Mode on iPhone 11 and 12 works. When Night Mode is enabled, iPhone calculates how many one-second-long exposures it will need to combine together to get enough light to make a good image. If you’re hand holding the phone, it won’t take more than 3, but if you use a tripod it will sense that you’re not moving and allow upwards of 20-30 one-second exposures to be combined.
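To get a feel for why combining more exposures helps, here is a minimal sketch. It is not Apple's algorithm, just a toy model I'm assuming for illustration: every exposure sees the same dim patch plus random sensor noise, and the "stack" is a plain pixel-by-pixel average. The function names and the numbers (`true_level`, `noise_sigma`) are made up.

```python
import random
import statistics


def simulate_exposure(true_level, noise_sigma, n_pixels, rng):
    """One noisy exposure of a flat, evenly lit patch (hypothetical noise model)."""
    return [true_level + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]


def stack(frames):
    """Combine frames by averaging them pixel by pixel."""
    return [sum(pixels) / len(frames) for pixels in zip(*frames)]


def residual_noise(n_frames, true_level=20.0, noise_sigma=8.0,
                   n_pixels=20_000, seed=1):
    """Standard deviation left in the stacked patch after n_frames exposures."""
    rng = random.Random(seed)
    frames = [simulate_exposure(true_level, noise_sigma, n_pixels, rng)
              for _ in range(n_frames)]
    return statistics.pstdev(stack(frames))


if __name__ == "__main__":
    # 1 = a single shot, 3 = handheld Night Mode, 30 = the tripod case
    for n in (1, 3, 30):
        print(f"{n:>2} exposure(s): leftover noise ~ {residual_noise(n):.2f}")
```

In this toy model the leftover noise shrinks by roughly the square root of the number of exposures, which is why a 30-second tripod shot can look so much cleaner than a 3-second handheld one.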

You can drag a slider to change how many one-second exposures the camera takes and combines to try to get your desired effect. After I was able to capture two good photos of Einstein with the two phones, on the 12 Pro I moved the slider to zero seconds so I could see what a “normal” photo would look like in this level of darkness.

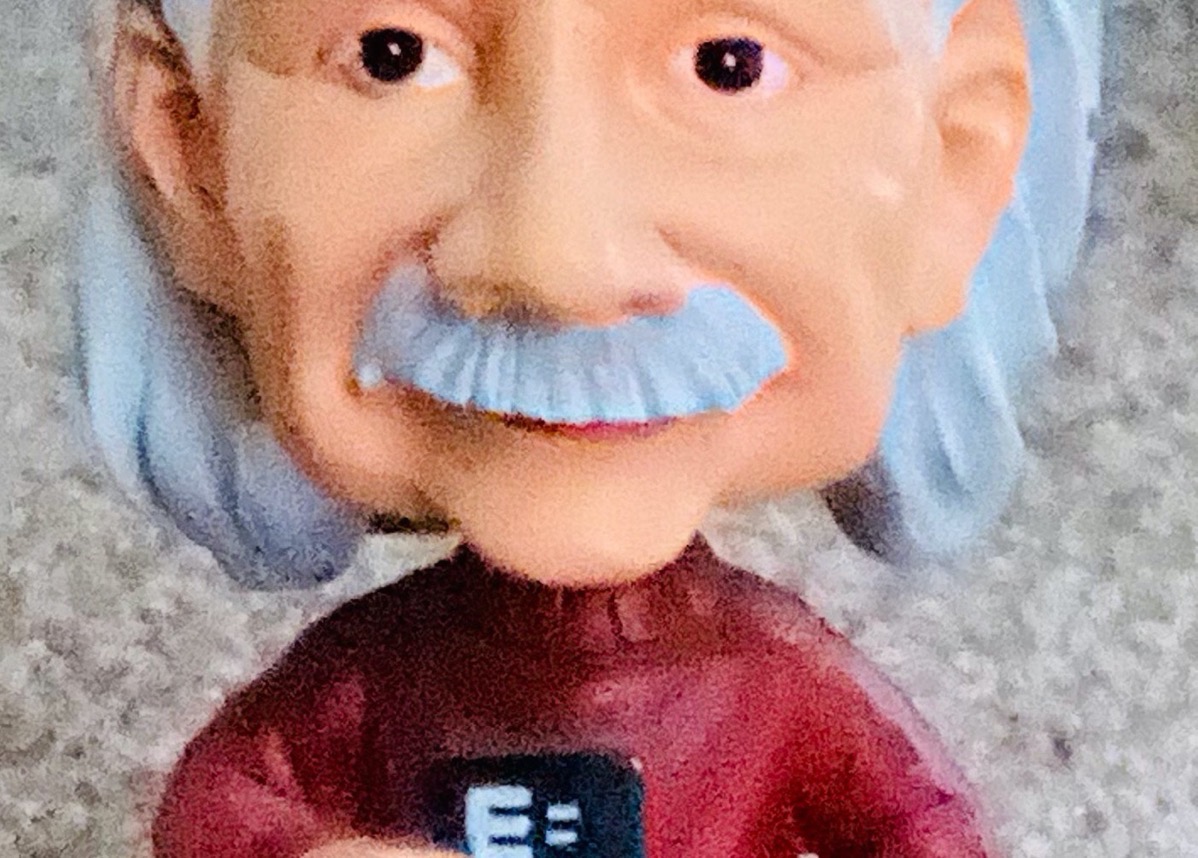

The normal photo really illustrates how dark the closet was when I was taking the photo, and both the 11 and 12 Pro cameras’ Night Mode did an amazing job of capturing his features in that challenging condition. Without zooming in at all, the iPhone 11 Pro image of Einstein looks good, but it looks kind of soft.

In the shownotes, I cropped in tight on Einstein’s face so you can see exactly why. The 11 Pro image is noisier than the one taken by the 12 Pro.

The LiDAR in the 12 Pro is what allowed it to achieve a good focus where the 11 Pro struggled, but the reduction in noise is achieved through different technology. The iPhone 12 Pro has a wider aperture on what they call the Wide lens (which is really the normal lens). The 12 Pro’s Wide lens aperture is f/1.6 where the 11 Pro’s is f/1.8 (a smaller number means a bigger aperture). The wider aperture on the 12 Pro means that it lets in more light, and more light means less noise in the final image. In addition, the 12 Pro has an improved image signal processor and a fancier HDR algorithm (now called “Smart HDR 3”).
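If you're curious how much more light f/1.6 lets in than f/1.8, the arithmetic is short. This sketch assumes everything else about the two lenses is equal (same focal length, same shutter time), in which case the light gathered scales with one over the f-number squared. The function names are just mine.

```python
import math


def light_ratio(f_wide, f_narrow):
    """How many times more light the wider aperture gathers.

    Assumes equal focal length and exposure time, so light scales with 1 / N^2.
    """
    return (f_narrow / f_wide) ** 2


def stops_gained(f_wide, f_narrow):
    """The same difference expressed in photographic stops (1 stop = 2x light)."""
    return math.log2(light_ratio(f_wide, f_narrow))


if __name__ == "__main__":
    ratio = light_ratio(1.6, 1.8)
    print(f"f/1.6 gathers {ratio:.2f}x the light of f/1.8")
    print(f"that is about {stops_gained(1.6, 1.8):.2f} of a stop")
```

The ratio works out to (1.8 / 1.6)², or about 27% more light. That's roughly a third of a stop: a real improvement, but not a dramatic one on its own.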

Dolby Vision HDR

Speaking of HDR, let’s flesh out what high dynamic range actually is a bit before we talk about video. When we look at a bright blue sky with white, puffy clouds and a shadowed barn in front of dark mountains, our eyes can see the entire range of light from bright to dark all at once, but camera sensors struggle with this. They can expose for the bright sky and clouds, but they lose the detail in the barn and mountain. Or they can expose for the dark areas and the sky and clouds get blown out and lose their detail.

This is because the sensors don’t have the bit depth to capture both at the same time. This can be overcome with two different methods: increasing the bit depth, or capturing several images exposed at different levels of brightness and then combining them.

In the iPhone 11 Pro, Apple used the neural engine to simulate an extended dynamic range. With all four models of the iPhone 12, we get the option to enable true HDR video at up to 60 frames per second (depending on model and your choices) and the video is in 10-bit dynamic range using the proprietary Dolby Vision.
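To make "10-bit" a little more concrete, here is a small sketch of what the extra two bits buy you. It uses a simple linear encoding of brightness from 0.0 to 1.0, which is my simplifying assumption; real HDR video uses non-linear transfer curves, so treat this as an illustration of the idea and not of Dolby Vision itself.

```python
def levels(bits):
    """Number of distinct brightness codes available at a given bit depth."""
    return 2 ** bits


def quantize(value, bits):
    """Snap a brightness in [0.0, 1.0] to the nearest code (linear encoding assumed)."""
    top = levels(bits) - 1
    clamped = max(0.0, min(1.0, value))
    return round(clamped * top)


if __name__ == "__main__":
    print(f" 8-bit: {levels(8)} levels per channel")
    print(f"10-bit: {levels(10)} levels per channel")

    # Two slightly different shadow tones (made-up values)
    shadow_a, shadow_b = 0.010, 0.011
    for bits in (8, 10):
        a, b = quantize(shadow_a, bits), quantize(shadow_b, bits)
        verdict = "distinct" if a != b else "merged into one code"
        print(f"{bits:>2}-bit: codes {a} and {b} -> {verdict}")
```

With four times as many levels per channel, two shadow tones that collapse into the same value at 8 bits can stay separate at 10 bits, which is the kind of detail that survives in the dark parts of an HDR video.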

I wanted to see what the real-world difference between these two methods would be. Since I don’t have a dark mountain with white puffy clouds to film, I picked an even more difficult setting. One of the hardest things to record is a computer or phone screen and anything else at the same time. Normally you have to lower the brightness on the display in order to have any hope of seeing anything onscreen while viewing the surroundings.

I took a short video of my desk where I moved from looking at my 5K screen and my MacBook Pro monitor to looking at the shadows underneath them. I recorded with the 12 Pro and then the 11 Pro, and I had them both record at 60 frames per second in 4K. If you watch the videos on any device you can see a big difference, but you would only be able to appreciate the full beauty of the 4K HDR iPhone 12 Pro video if you played it back on an HDR-capable device like a newer TV or an iPhone 12 Pro.

The videos are also huge files even though they’re under 20 seconds. For that reason, I dumbed them down to 720p, which won’t show off the beauty of the Dolby Vision HDR but will show you how the machine learning algorithms used on the iPhone 11 Pro aren’t nearly as good as true 10-bit HDR recording. The biggest thing you’ll notice is that when I move the iPhone 11 Pro to look mostly at a screen, it adjusts the exposure downwards very quickly to expose the screen properly, and then dramatically increases the exposure when I move to record the shadows.

During those transitions, especially going from the screen to the shadows, you’ll see the screen blow out. The effect is rather jarring and not at all smooth. In contrast, the iPhone 12 Pro maintains a fairly consistent exposure throughout the videos.

At the very end of the video from the 11 Pro you can see the screen of my MacBook Pro completely blown out when the camera properly exposes my mic and a shadowed chest of drawers behind it. In case you don’t want to waste bandwidth watching the videos, I’ve included the final frame from the two cameras to illustrate how well the iPhone 12 Pro’s HDR does in comparison.

Moving Cat with Night Mode

This final part of my observations of the iPhone 12 Pro camera might have fit better where I was talking about how Night Mode works, but I can’t quite explain what the camera did exactly so I’m making it more of a footnote.

As I explained earlier, Night Mode on the 11 and 12s takes a series of one-second exposures and stacks them in-camera to create a single well-exposed image. I’ve noticed that this technique seems to work even if something in view moves during capture of the image. I was able to prove this the other night when I had two cats as subjects.

One cat was sitting on the recliner with me and never moved. The second cat was sitting on the coffee table a foot or so away. When I took the shot, Night Mode chose 3 one-second exposures, and while I was waiting the 3 seconds, the cat on the table first turned her head and then jumped entirely off of the table.

The resultant picture has the close, stationary cat perfectly exposed, and perfectly focussed with a high level of detail in her fur. The distant cat who moved during the capture is frozen in the position where she was when I started the capture, but she’s kind of blurry and is showing a lot of noise compared to the close cat.

The EXIF data on the image says that this was exposed for 1/29th of a second at ISO 1000 at f/1.6. I have no idea how it created this image. It may have simply discarded all of the images except the first before the second cat moved, or maybe the algorithm is smart enough to actually keep what didn’t move from all of the exposures and discard what changed.

If it only kept the first exposure, I wouldn’t have expected the foreground cat to be so well exposed and so low in noise, and yet what kind of sorcery would be required to track what changed and discard it while keeping the rest of the image? I suppose that 6-core A14 Bionic chip and the 4-core GPU have to have something to do, but wow that’s crazy if it did this.
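I don't know what Apple actually does, but the "keep what didn't move" idea is less like sorcery than it sounds. Here is a purely speculative sketch of one way it could work: treat the first exposure as the reference, and for each pixel only average in the later exposures that still agree with it. Everything here (the function name, the threshold, the six-pixel "frames") is made up for illustration.

```python
def merge_with_reference(frames, threshold):
    """Average each pixel over the frames that agree with the first frame.

    Returns the merged pixels and, per pixel, how many frames were used.
    A speculative illustration only, not Apple's confirmed method.
    """
    reference = frames[0]
    merged, used = [], []
    for i, ref_px in enumerate(reference):
        # The reference always agrees with itself, so this list is never empty.
        agreeing = [f[i] for f in frames if abs(f[i] - ref_px) <= threshold]
        merged.append(sum(agreeing) / len(agreeing))
        used.append(len(agreeing))
    return merged, used


if __name__ == "__main__":
    # Pixels 0-1: the cat that stayed put. Pixels 2-3: dark background.
    # Pixels 4-5: the cat that jumped off the table after the first exposure.
    frames = [
        [201, 198, 12, 9, 150, 148],
        [199, 202, 10, 11, 11, 10],
        [200, 200, 8, 10, 9, 12],
    ]
    merged, used = merge_with_reference(frames, threshold=20)
    print("merged pixels:", merged)
    print("frames used:  ", used)
```

In this toy version the stationary cat and the background get averaged across all three exposures, while the cat that moved comes from the first exposure alone. That would leave her frozen in her starting position and noisier than everything else, which at least matches what I saw in the photo.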

Bottom Line

The bottom line is that while the iPhone 11 Pro camera is pretty darn amazing, I can definitely see a difference in low-light photography and in video with the new HDR mode. And if someone can explain the cat photo, I’m all ears!